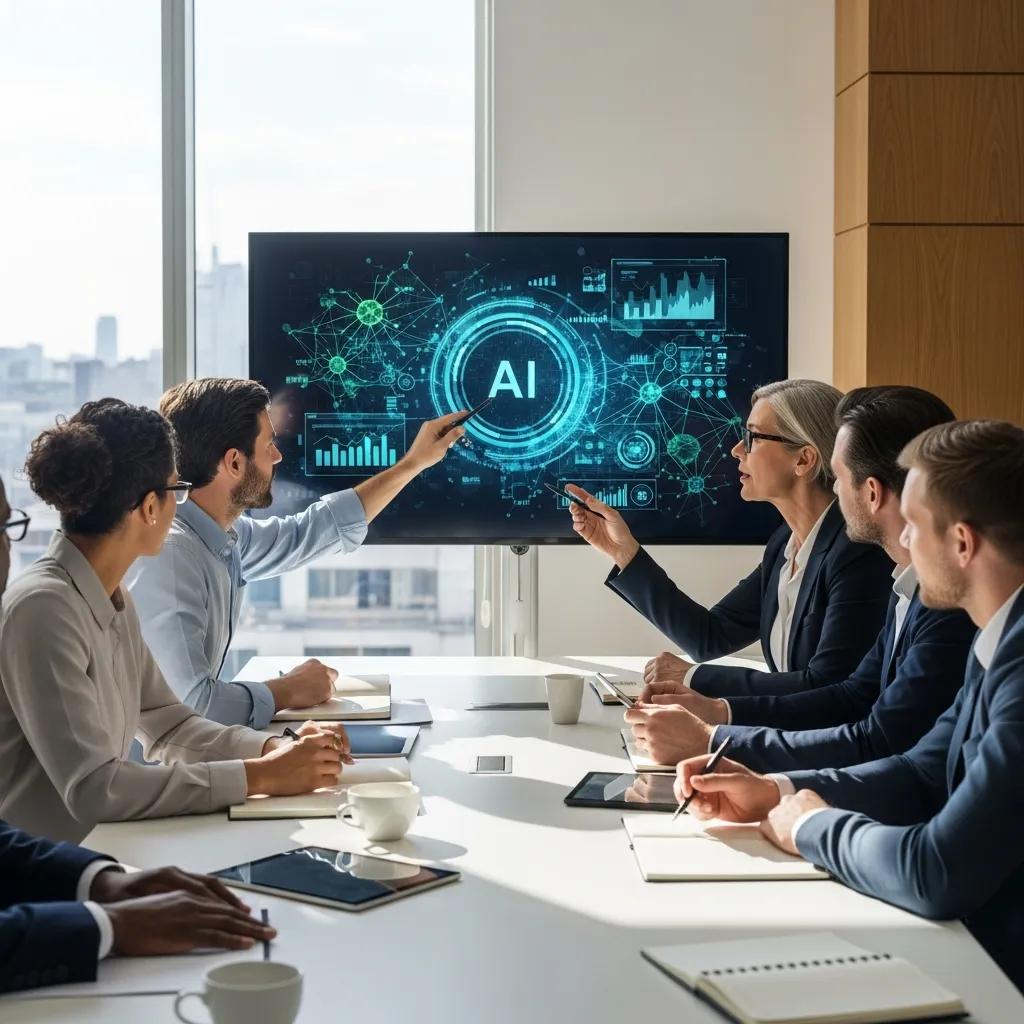

Governance Without Gridlock: How to Introduce AI Safely in Regulated Industries with Effective AI Governance

AI governance is the set of policies, roles, and technical controls that ensure AI systems operate safely, ethically, and in compliance with regulation while enabling business value. In regulated industries, effective governance prevents legal, operational, and reputational gridlock by defining decision rights, human oversight, and monitoring that keep projects moving. This article explains why AI governance is essential for regulated sectors, outlines the ethical pillars that guide implementation, maps compliance to data-security practices, and offers industry-specific solutions for finance and healthcare. You will also get practical guidance on fractional executive leadership, including the role of a Fractional Chief AI Officer, and a time-boxed adoption path that small and medium-sized businesses (SMBs) can use to start delivering measurable ROI. Throughout, the focus is human-centered AI, bias mitigation, explainability, and actionable steps SMBs can take in 30/60/90 days to adopt AI responsibly while avoiding stalled approvals or cascading compliance risk.

AI Governance and Compliance in Regulated Industries


AI Governance and Compliance in Regulated Industries, SA Akhee, 2023

Why Is AI Governance Essential for Regulated Industries?

AI governance defines who decides, what controls are required, and how models are monitored so organizations meet legal and operational obligations without slowing innovation. Effective governance aligns model development with compliance checkpoints, audit trails, and human-in-the-loop reviews, which reduces the risk of fines and operational failures. For regulated businesses, governance also protects reputation by preventing biased or opaque decisions that can erode customer trust. The list below summarizes why governance is non-negotiable for regulated sectors.

AI governance matters for three primary reasons:

- Legal Compliance: Ensures models meet regulatory requirements and documentation obligations.

- Operational Resilience: Prevents model drift, supply-chain failures, and security incidents that disrupt services.

- Reputational Protection: Reduces bias and privacy harms that damage stakeholder trust.

These legal, operational, and reputational drivers lead directly into the specific risks organizations face when adopting AI.

What Are the Key Risks of AI Adoption in Regulated Sectors?

Key risks from AI adoption include data breaches exposing sensitive records, algorithmic bias producing discriminatory outcomes, regulatory non-compliance due to inadequate documentation, and third-party model risk from vendors with opaque practices. Each of these risks can cause direct financial penalties, operational downtime, and customer harm, especially when protected health information (PHI) or financial transaction data are involved. To mitigate these risks, organizations must pair technical controls (encryption, access management, and logging) with governance artifacts such as model cards and vendor risk assessments. Addressing these risk types early reduces remediation costs and accelerates safe deployment.

Clear governance also prevents vendor-related surprises by requiring transparency from third-party providers. That transparency feeds the validation and monitoring processes that catch model drift before it causes harm. This process perspective leads into how governance mechanisms specifically avoid gridlock between legal and product teams.

How Does AI Governance Prevent Operational and Legal Gridlock?

AI governance prevents gridlock by establishing clear policies, decision rights, and streamlined approval flows that balance speed with oversight. A practical mechanism is a policy-to-oversight pipeline: a use-case policy defines acceptable risk, human-in-the-loop checkpoints enforce decisions, and automated monitoring triggers remediation without full work stoppage. By assigning escalation paths and documentation requirements up front, governance shortens review cycles and keeps deployments moving. For SMBs, lightweight templates—standardized model cards, testing scripts, and vendor questionnaires—provide the practical artifacts teams need to approve pilots quickly while preserving compliance.
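The policy-to-oversight pipeline described above can be sketched in Python. In this sketch, policy decides which checkpoints a use case needs, and monitoring alerts map to graduated responses instead of a blanket stop. The tier names, checkpoint names, and alert severities are hypothetical placeholders, not terms from any standard.

```python
from dataclasses import dataclass, field

# Hypothetical policy: which checkpoints each risk tier must clear before release.
REQUIRED_CHECKPOINTS = {
    "low": ["model_card"],
    "medium": ["model_card", "bias_test"],
    "high": ["model_card", "bias_test", "human_signoff", "legal_review"],
}

@dataclass
class UseCase:
    name: str
    risk_tier: str                              # "low", "medium", or "high"
    completed: set = field(default_factory=set)  # checkpoints already cleared

def missing_checkpoints(use_case):
    """Return the checkpoints still outstanding for this use case's tier."""
    required = REQUIRED_CHECKPOINTS[use_case.risk_tier]
    return [c for c in required if c not in use_case.completed]

def on_monitoring_alert(use_case, severity):
    """Map a monitoring alert to a remediation action rather than a full stop."""
    if severity == "critical" and use_case.risk_tier == "high":
        return "pause_and_escalate"      # only the riskiest cases halt
    if severity == "critical":
        return "fallback_to_human"       # keep serving, but a person decides
    return "open_remediation_ticket"     # non-critical: fix while running
```

For example, a medium-risk use case that has only a model card still needs `bias_test`. A routine warning on that use case opens a ticket instead of halting the deployment.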

These operational controls also set the stage for ethical implementation, where fairness and explainability become enforceable elements of the governance framework.

What Are the Pillars of Ethical AI Implementation in Regulated Industries?

Ethical AI implementation rests on a compact set of pillars—Fairness, Transparency, Accountability, Privacy, and Security—that together ensure systems behave predictably and justifiably. Each pillar translates into tangible practices: fairness requires bias testing and mitigation, transparency needs model documentation and explainability artifacts, accountability demands roles, escalation routes, and remediation plans, privacy mandates data minimization and consent management, and security requires encryption and incident readiness. Aligning these pillars with governance policies creates a defensible, human-centered approach that supports both regulators and end users. Below is a practical breakdown of each pillar and how organizations can operationalize them.

The five ethical pillars and one-line descriptions:

- Fairness: Detect and mitigate disparate impacts through bias audits and balanced sampling.

- Transparency: Produce model cards and decision logs that explain system behavior to stakeholders.

- Accountability: Assign ownership for decisions and remediation, including escalation procedures.

- Privacy: Minimize data collection and apply strong de-identification and consent management.

- Security: Implement encryption, access controls, and logging to prevent data and model compromise.

Applying these pillars in day-to-day workflows requires specific controls like bias tests and explainability tools, which we describe next.

How Do Fairness, Transparency, and Accountability Shape Ethical AI?

Fairness, transparency, and accountability work together to make systems auditable and remediable. Fairness begins with data audits and pre-deployment bias testing that identify skew and disparate impact; transparency follows by producing artifacts such as model cards and feature importance summaries that show how decisions are made. Accountability assigns owners for model outcomes and establishes remediation plans and reporting duties, ensuring issues are traced and fixed. Practically, a pre-deployment pipeline might include data fairness checks, mandatory documentation, and an assigned remediation lead, creating a repeatable control loop that supports audit readiness and continuous improvement.
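The pre-deployment loop above can be sketched as a single gate that returns a list of blocking issues. It assumes binary (0/1) decisions grouped by a protected attribute. As one possible fairness check, it uses the disparate impact ratio with a 0.8 cutoff, the "four-fifths" rule of thumb from US employee-selection guidelines. The required model-card fields and the function names are illustrative assumptions.

```python
# Hypothetical minimum documentation every model card must fill in.
REQUIRED_DOC_FIELDS = ("intended_use", "training_data", "metrics", "limitations")

def selection_rate(outcomes):
    """Share of positive (1) decisions in a list of 0/1 outcomes."""
    return sum(outcomes) / len(outcomes)

def disparate_impact_ratio(outcomes_by_group):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = [selection_rate(o) for o in outcomes_by_group.values()]
    if max(rates) == 0:
        return 1.0  # no group receives positive decisions, so nothing to compare
    return min(rates) / max(rates)

def predeployment_gate(outcomes_by_group, model_card, remediation_lead, threshold=0.8):
    """Return blocking issues; an empty list means the model may proceed."""
    issues = []
    ratio = disparate_impact_ratio(outcomes_by_group)
    if ratio < threshold:
        issues.append(f"fairness: disparate impact ratio {ratio:.2f} below {threshold}")
    missing = [f for f in REQUIRED_DOC_FIELDS if not model_card.get(f)]
    if missing:
        issues.append("documentation: missing " + ", ".join(missing))
    if not remediation_lead:
        issues.append("accountability: no remediation lead assigned")
    return issues
```

Returning a list rather than a pass/fail flag lets reviewers see every gap at once. They can then fix the gaps in parallel instead of rerunning the gate repeatedly.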

Those controls form the basis for bias-mitigation and explainability strategies that keep models both effective and defensible.

What Strategies Mitigate Bias and Ensure Explainable AI?

Bias mitigation combines data-centric and model-centric techniques: reweighting or resampling training data, adding fairness constraints during training, and applying post-hoc adjustments to scores. Explainability techniques like SHAP summaries, LIME, or distilled rule-sets produce human-interpretable outputs for regulators and clinicians, while model cards summarize intended use, evaluation metrics, and known limitations. For SMBs, low-overhead options include automated fairness checks integrated into CI pipelines and standardized explainability summaries that accompany every deployment. Regular monitoring schedules then detect bias drift so teams can retrain or recalibrate models before harms accumulate.
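One data-centric technique is reweighing (Kamiran and Calders). It gives each training example the weight P(group) * P(label) / P(group, label), which makes group and label independent in the weighted data. This minimal sketch assumes categorical group and label values. The resulting weights can be passed to any trainer that accepts per-sample weights, such as the `sample_weight` argument that many scikit-learn estimators accept in `fit`.

```python
from collections import Counter

def reweigh(groups, labels):
    """Per-example weights w(g, y) = P(g) * P(y) / P(g, y).

    Under these weights, group membership and label are statistically
    independent in the training set.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]
```

For example, take groups `["a", "a", "a", "b", "b", "b"]` and labels `[1, 1, 0, 1, 0, 0]`. The weights come out to `[0.75, 0.75, 1.5, 1.5, 0.75, 0.75]`, which brings the weighted positive rate of both groups to 0.5.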

These tactical approaches to bias and explainability feed directly into compliance alignment with data security and regulation.

How Do AI Compliance Strategies Align with Data Security and Regulations?

Compliance strategies align with data-security practices by linking regulatory obligations, such as documentation and risk classification, to technical controls like encryption, access control, and audit logging. Mapping regulation to control requirements makes compliance actionable. For example, if a regulation mandates record-keeping, the governance requirement becomes tamper-evident audit logs and versioned model cards. For SMBs, prioritized controls (data minimization, role-based access, and continuous monitoring) deliver the most compliance leverage with limited resources. The table below compares major regulations and frameworks across scope, required controls, and SMB impact to help teams prioritize work.

| Regulation / Framework | Scope | Required Controls | SMB Impact |
|---|---|---|---|
| EU AI Act | Risk-based AI classification | Risk assessments, documentation, high-risk controls | Significant documentation burden for high-risk systems |
| NIST AI RMF | Risk management guidance | Inventory, governance, continuous monitoring | Practical roadmap for risk-based controls |
| GDPR / CCPA | Personal data protection | Consent, data subject rights, data minimization | Requires privacy-by-design for ML pipelines |
| HIPAA | Protected health information | Access controls, audit logs, encryption | High compliance bar for healthcare AI |
| State AI Laws (e.g., disclosure rules) | Varies by state | Transparency and vendor oversight | Additional disclosure requirements for customer-facing AI |

This mapping shows how regulatory requirements translate to concrete security and governance tasks SMBs can adopt to achieve compliance while limiting overhead. Next, we summarize the critical regulations and immediate priorities for SMBs.
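The regulation-to-control table above can be turned into a prioritized backlog. The idea is to count how many applicable regulations each missing control serves, so controls with the most compliance leverage come first. The mapping below is a simplified, hypothetical reading of the table, with audit logging added for the EU AI Act's record-keeping duties. Actual obligations depend on each use case.

```python
from collections import Counter

# Simplified, hypothetical mapping based on the comparison table; not exhaustive.
CONTROLS_BY_REGULATION = {
    "EU AI Act (high-risk)": {"risk_assessment", "technical_documentation", "audit_logs"},
    "NIST AI RMF": {"ai_inventory", "governance_roles", "continuous_monitoring"},
    "GDPR/CCPA": {"consent_management", "data_subject_requests", "data_minimization"},
    "HIPAA": {"access_controls", "audit_logs", "encryption"},
}

def control_backlog(applicable, implemented):
    """Return missing controls, most widely shared first (ties alphabetical)."""
    counts = Counter()
    for regulation in applicable:
        for control in CONTROLS_BY_REGULATION[regulation]:
            if control not in implemented:
                counts[control] += 1
    return sorted(counts, key=lambda c: (-counts[c], c))
```

Consider a case with the EU AI Act (high-risk) and HIPAA in scope and encryption already in place. Here `audit_logs` rises to the top of the backlog because one control serves both regulations.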

Artificial Intelligence Regulation: A Framework for Governance

This article develops a conceptual framework for regulating Artificial Intelligence (AI) that encompasses all stages of modern public policy-making, from the basics to a sustainable governance. Based on a vast systematic review of the literature on Artificial Intelligence Regulation (AIR) published between 2010 and 2020, a dispersed body of knowledge loosely centred around the “framework” concept was organised, described, and pictured for better understanding. The resulting integrative framework encapsulates 21 prior depictions of the policy-making process, aiming to achieve gold-standard societal values, such as fairness, freedom and long-term sustainability. This challenge of integrating the AIR literature was matched by the identification of a structural common ground among different approaches. The AIR framework results from an effort to identify and later analytically deduce synthetic, and generic tool for a country-specific, stakeholder-aware analysis of AIR matters. Theories and principles as diverse as Agile and Ethics were combined in the “AIR framework”, which provides a conceptual lens for societies to think collectively and make informed policy decisions related to what, when, and how the uses and applications of AI should be regulated. Moreover, the AIR framework serves as a theoretically sound starting point for endeavours related to AI regulation, from legislation to research and development. As we know, the (potential) impacts of AI on society are immense, and therefore the discourses, social negotiations, and applications of this technology should be guided by common grounds based on contemporary governance techniques, and social values legitimated via dialogue and scientific research.

Artificial intelligence regulation: a framework for governance, CD Dos Santos, 2021

What Are the Critical AI Regulations Impacting Regulated Industries?

Critical regulations affecting AI include the EU AI Act with its risk tiers, NIST AI RMF as a practical risk-management scaffold, privacy laws like GDPR and CCPA that regulate data handling, and healthcare rules such as HIPAA that impose strict PHI controls. For SMBs, the immediate priority is to classify use cases by risk, identify applicable privacy and sector-specific laws, and create a minimal set of controls that satisfy documentation and security needs. Treating NIST AI RMF as an implementation roadmap helps translate high-level obligations into actionable tasks like inventories, model documentation, and monitoring plans. Establishing these priorities prevents surprises during audits and speeds approvals.
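Classifying use cases by risk can start from a simple inventory record. The sketch below triages each item into a tier and lists the laws worth reviewing. The fields, tier rules, and labels are hypothetical simplifications of the priorities described above. They are not a legal classification under the EU AI Act or any other regime.

```python
from dataclasses import dataclass

@dataclass
class InventoryItem:
    name: str
    affects_individuals: bool   # decisions about people, e.g. credit, care, hiring
    uses_personal_data: bool
    uses_phi: bool
    customer_facing: bool

def triage(item):
    """Return (risk_tier, laws_to_review) using hypothetical triage rules."""
    laws = []
    if item.uses_personal_data:
        laws.append("GDPR/CCPA")
    if item.uses_phi:
        laws.append("HIPAA")
    if item.customer_facing:
        laws.append("state disclosure rules")
    if item.affects_individuals:
        tier = "high"
        laws.append("EU AI Act (if in scope)")
    elif item.uses_personal_data or item.uses_phi or item.customer_facing:
        tier = "medium"
    else:
        tier = "low"
    return tier, laws

def prioritize(items):
    """Order the inventory so high-risk use cases are reviewed first."""
    order = {"high": 0, "medium": 1, "low": 2}
    return sorted(items, key=lambda i: order[triage(i)[0]])
```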

These compliance priorities require technical practices—detailed next—that secure data and enable audit readiness.

How Can SMBs Implement Data Security Best Practices for AI?

SMBs can implement effective AI security with a focused checklist: classify data and minimize collection, enforce role-based access and encryption at rest and in transit, maintain immutable audit logs and model cards, vet vendors for transparency, and implement incident response plans tailored to ML incidents. Low-cost tools and managed services can automate logging and encryption, while simple governance templates standardize vendor questionnaires and model documentation. Regular tabletop exercises and incident simulations ensure teams know how to react to data or model breaches. Together, these steps provide a defensible posture without requiring enterprise-only budgets.
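A tamper-evident audit trail can be approximated with a hash chain. Each entry stores the SHA-256 hash of the previous entry, so editing or deleting any past record breaks verification. This is a minimal in-memory sketch using only the Python standard library. The `AuditLog` class and its field names are illustrative, and a production log would persist entries to durable storage.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

class AuditLog:
    """Append-only log where each entry hashes the previous one."""

    def __init__(self):
        self.entries = []

    def append(self, actor, action, details):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {"ts": time.time(), "actor": actor, "action": action,
                  "details": details, "prev_hash": prev_hash}
        payload = json.dumps(record, sort_keys=True).encode()
        record["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(record)
        return record["hash"]

    def verify(self):
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode()
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

A hash chain is tamper-evident rather than tamper-proof. Anyone who can rewrite every entry can recompute the chain. Storing the latest hash in a separate, write-once location makes after-the-fact edits detectable.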

Key immediate actions SMBs should take include the following checklist:

- Classify and minimize: Only collect necessary data and label sensitivity levels.

- Access controls: Use role-based permissions and least privilege for model pipelines.

- Encryption and logging: Encrypt data and keep immutable logs for audit trails.

- Vendor vetting: Require transparency and documentation from third-party models.

Following this checklist enables secure, compliant AI deployments and moves the organization toward industry-specific governance patterns.

What Industry-Specific AI Governance Challenges Exist and How Can They Be Solved?

Different regulated sectors face distinct governance challenges: financial services must manage model risk, fairness, and transaction security; healthcare must protect PHI, validate clinical performance, and ensure patient safety. Each industry benefits from governance patterns that combine technical controls, audit artifacts, and role-based responsibilities tailored to sector needs. Practical SMB solutions focus on lightweight but rigorous practices—model validation checklists for finance and clinical validation protocols for healthcare—that deliver compliance without organizational bloat. The table below compares finance and healthcare risks and proposed SMB mitigations to illustrate concrete approaches.

| Industry | Primary Risk / Requirement | Practical SMB Solution |
|---|---|---|
| Financial Services | Model risk, transaction integrity, regulatory audits | Model risk assessments, versioned audit trails, vendor validation |
| Healthcare | PHI protection, clinical validation, patient safety | Data de-identification, clinical testing protocols, consent logging |
| Cross-industry | Third-party opacity, bias, explainability | Mandatory model cards, periodic bias audits, human-in-loop checks |

This side-by-side comparison highlights how targeted controls map to specific compliance needs and helps SMBs choose pragmatic starting points. The subsections below apply these patterns to financial services and healthcare, followed by short, anonymized examples of outcome-focused governance improvements.

How Does AI Governance Address Financial Services Compliance?

In financial services, governance emphasizes model risk management, audit readiness, and transaction security to satisfy regulators and customers. SMBs should implement model validation steps including backtesting, documentation of training data provenance, and clear escalation paths for detected performance issues. Vendor oversight is crucial—contracts must require transparency and testability, and audit trails should record model versions and approvals. When these controls are in place, finance teams can deploy models for fraud detection or credit scoring with reduced regulatory pushback and faster approvals, which maintains operational continuity and customer trust.
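A basic backtest compares, period by period, the model's average predicted probability with the observed event rate. Periods where the gap exceeds a tolerance are flagged so they follow the escalation path. The input layout and the 0.02 tolerance are assumptions for illustration. Real model validation typically adds statistical tests and segment-level breakdowns.

```python
def backtest(periods, tolerance=0.02):
    """Flag periods where the observed event rate drifts from the predicted rate.

    `periods` maps a period label to a list of (predicted_probability, outcome)
    pairs, where outcome is 1 if the event (e.g. default or fraud) occurred.
    Returns {period: observed_minus_predicted} for periods needing escalation.
    """
    flagged = {}
    for period, rows in periods.items():
        predicted = sum(p for p, _ in rows) / len(rows)
        observed = sum(y for _, y in rows) / len(rows)
        gap = observed - predicted
        if abs(gap) > tolerance:
            flagged[period] = round(gap, 4)
    return flagged
```

A positive gap means the model underestimates risk, which is usually the more urgent direction to escalate for credit or fraud models.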

Applying these governance steps often shortens approval cycles and reduces remediation costs, making financial AI projects both faster and more sustainable.

What Are Healthcare AI Regulations and Patient Safety Considerations?

Healthcare AI governance centers on HIPAA-compliant handling of PHI, demonstrable clinical validation, and continuous safety monitoring for patient outcomes. SMBs deploying clinical decision support should document data provenance, obtain appropriate consent, and run prospective or retrospective validation studies that demonstrate safety and effectiveness. Lightweight clinical oversight roles—such as an assigned clinical reviewer and a documented remediation pathway—can make deployments defensible under inspection. Prioritizing logging, consent handling, and model performance tracking protects patients and reduces the risk of costly interventions or regulatory penalties.
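The de-identification step can be sketched as a function that applies three techniques from HIPAA's Safe Harbor method:

- dropping direct identifiers;
- keeping only the year of dates;
- collapsing ages over 89 into a single "90+" category.

This is a deliberately partial illustration. The field names are hypothetical, and Safe Harbor covers 18 identifier categories (including geographic units smaller than a state), most of which this sketch does not handle.

```python
from datetime import date

# Hypothetical field names for a subset of direct identifiers; not the full Safe Harbor list.
DIRECT_IDENTIFIERS = {"name", "mrn", "ssn", "phone", "email", "street_address"}

def deidentify(record):
    """Return a copy of a patient record with direct identifiers removed,
    dates reduced to year, and ages over 89 collapsed to "90+"."""
    out = {}
    for key, value in record.items():
        if key in DIRECT_IDENTIFIERS:
            continue                      # drop direct identifiers entirely
        if isinstance(value, date):
            out[key] = value.year         # keep only the year of any date
        elif key == "age" and value > 89:
            out[key] = "90+"              # aggregate ages over 89
        else:
            out[key] = value
    return out
```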

These healthcare-specific controls illustrate how governance must be tightly integrated with clinical workflows to preserve patient safety and regulatory compliance. Anonymized outcomes from SMB programs suggest that well-applied governance also produces measurable business benefits.

- SMBs that applied governance patterns reported a 35% increase in average order value (AOV) after biased pricing models were corrected.

- Another anonymized program reported 95% faster ad production after governance improvements standardized model inputs and approval workflows.

These anonymized results suggest that governance not only reduces risk but can also unlock measurable ROI and operational speed.

How Does a Fractional Chief AI Officer Enable Governance Without Gridlock?

A Fractional Chief AI Officer (fCAIO) provides executive-level AI leadership on a flexible basis, combining strategy, governance setup, and operational oversight without the cost of a full-time hire. The fCAIO role clarifies decision rights, defines governance artifacts such as model cards and audit trails, and coordinates cross-functional stakeholders to prevent bottlenecks between legal, security, and product teams. For SMBs, a fractional leader accelerates time-to-value by operationalizing compliance, implementing monitoring, and measuring ROI so projects move from pilot to production swiftly. Below is a comparison of service types to help SMBs decide between full-time, fractional, and short engagements.

| Service Type | Role / Responsibility | SMB Benefit / Time-to-Value |
|---|---|---|
| Full-time CAIO | End-to-end AI strategy and leadership | Deep continuity, higher cost, slower hire cycle |
| Fractional CAIO (fCAIO) | Strategic governance, oversight, rapid program setup | Executive skill at lower cost, faster deployment |
| AI Opportunity Blueprint™ | Time-boxed discovery and roadmap | Rapid prioritization and decision-ready outcomes |

Understanding these alternatives helps SMBs choose an approach that balances expertise, budget, and speed. The next subsection outlines direct benefits of fractional engagements for SMBs.

A Five-Layer Framework for AI Governance: Integrating Regulation, Standards, and Certification

The governance of artificial intelligence (AI) systems requires a structured approach that connects high-level regulatory principles with practical implementation. Existing frameworks lack clarity on how regulation

A five-layer framework for AI governance: integrating regulation, standards, and certification, A Agarwal, 2025

What Are the Benefits of Fractional CAIO Services for SMBs?

Fractional CAIO services deliver executive oversight, governance design, and hands-on guidance that accelerate compliant AI adoption while keeping costs predictable. SMBs gain access to strategic decision-making (policy design, vendor oversight, and ROI measurement) without committing to a full-time executive. This model shortens approval cycles by embedding governance into development workflows and provides training and change management to increase adoption. SMBs can see measurable ROI within 90 days when fractional leadership focuses on high-impact, low-risk pilots with clear metrics, which supports sustainable scaling.

These operational advantages naturally lead to time-boxed engagements that produce quick, decision-ready roadmaps and prioritized initiatives.

How Does the AI Opportunity Blueprint™ Accelerate Safe AI Adoption?

The AI Opportunity Blueprint™ is a focused, time-boxed engagement that delivers a prioritized, compliance-aware AI adoption roadmap in 10 days, at a price of $5,000. In a condensed discovery format, the Blueprint™ assesses readiness, prioritizes use cases, identifies regulatory touchpoints, and produces decision-ready recommendations that align governance, privacy, and security requirements with business value. For SMBs, this short engagement reduces friction between idea and implementation by delivering a clear list of pilots, necessary controls, and measurable outcomes. A time-boxed Blueprint™ combined with fractional leadership creates a practical path from assessment to production without bureaucratic delay.

After a Blueprint™ or fractional engagement, teams are equipped to implement the roadmap and measure early ROI quickly.

What Is Your Actionable Roadmap to Compliant and Responsible AI Adoption?

An actionable roadmap for SMBs should follow a clear 30/60/90 day cadence: rapid assessment and prioritization, pilot implementation with governance artifacts, and expansion with monitoring and training. The roadmap balances quick wins (low-risk, high-value pilots) with foundational governance steps like model cards, bias tests, and incident response. Training and change management are scheduled from day one to ensure adoption and minimize employee stress. Below is a concise roadmap that SMBs can use to begin implementing compliant and responsible AI.

Follow this step-by-step roadmap to get started:

- Days 0–30: Assess & Prioritize — Conduct a rapid AI readiness and risk assessment; classify use cases by risk and value.

- Days 31–60: Pilot & Govern — Launch a low-risk pilot with model cards, bias tests, access controls, and vendor due diligence.

- Days 61–90: Monitor & Scale — Implement monitoring, incident response, and training; expand pilots that show measurable ROI.

This timeline creates early momentum while establishing the governance artifacts necessary for audit readiness and sustainable scaling. The next subsections provide concrete actions for immediate implementation and ongoing support.

How Can SMBs Start Implementing AI Governance Today?

SMBs can start by appointing a governance lead, running a rapid 2–5 day inventory of data and models, and selecting one high-value, low-risk pilot to operationalize governance artifacts. Create basic templates—model card, vendor questionnaire, and bias test checklist—and require them for every pilot. Use affordable tooling for logging and access control and set up a monitoring cadence with weekly reviews during pilot stages. These steps produce immediate control and transparency while keeping teams focused and reducing approval delays.
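For the weekly review cadence, one common drift metric is the Population Stability Index (PSI). PSI compares the distribution of a score or feature in the current week against a baseline as the sum over bins of (actual share - expected share) * ln(actual share / expected share). The sketch assumes shared, sorted bin edges. The 0.1 and 0.25 cutoffs are widely used rules of thumb rather than regulatory thresholds, and the action labels are hypothetical.

```python
import math

def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a baseline and a current sample.

    `edges` are sorted bin boundaries shared by both samples; `eps` replaces
    empty-bin shares so the logarithm stays defined.
    """
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(v >= e for e in edges)] += 1  # index of v's bin
        return [max(c / len(values), eps) for c in counts]

    e_share, a_share = shares(expected), shares(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_share, a_share))

def weekly_review(baseline, this_week, edges):
    """Translate the PSI score into a hypothetical review action."""
    score = psi(baseline, this_week, edges)
    if score < 0.1:
        return "stable", score
    if score < 0.25:
        return "investigate", score
    return "retrain_or_recalibrate", score
```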

Starting with a single governed pilot builds momentum and creates reusable artifacts for future projects, which links directly to necessary ongoing support.

What Ongoing Support and Training Ensure Sustainable AI Governance?

Sustainable governance depends on periodic reviews, role-based training, and access to external expertise for complex compliance issues. Establish a quarterly governance review cadence that revisits risk classifications and monitors bias and performance metrics. Deliver role-specific training tied to workflows—developers need CI/CD governance practices while business users need decision-interpretation training—to increase acceptance and reduce stress. For complex or evolving regulatory needs, fractional CAIO check-ins and short, time-boxed engagements such as the AI Opportunity Blueprint™ provide expert guidance without full-time hires.

If you want structured, human-centered help moving from assessment to measurable ROI, consider booking a discovery conversation or exploring a time-boxed AI Opportunity Blueprint™ and fractional CAIO support to prioritize compliant pilots and accelerate adoption.

Frequently Asked Questions

What role does human oversight play in AI governance?

Human oversight is crucial in AI governance as it ensures that automated systems operate within ethical and legal boundaries. It involves having designated individuals or teams responsible for monitoring AI outputs, making decisions based on those outputs, and intervening when necessary. This oversight helps mitigate risks such as algorithmic bias and ensures compliance with regulations. By incorporating human judgment into the AI decision-making process, organizations can enhance accountability and maintain stakeholder trust, ultimately leading to more responsible AI deployment.
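Human oversight is often implemented as a routing rule that decides which model outputs a person must review before they take effect. The sketch below assumes each decision carries a confidence score and an `adverse` flag. The 0.9 threshold is an illustrative policy choice, as is the rule that adverse outcomes always go to a reviewer; neither is a requirement of any specific regulation.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    case_id: str
    prediction: str
    confidence: float   # model's probability for its prediction, 0..1
    adverse: bool       # e.g. a denial that materially affects a person

def route(decision, auto_threshold=0.9):
    """Send a model output either to automated action or to a human reviewer."""
    if decision.adverse:
        return "human_review"   # adverse outcomes always get a person
    if decision.confidence < auto_threshold:
        return "human_review"   # low confidence: defer to human judgment
    return "automated"

def review_queue(decisions, auto_threshold=0.9):
    """Case IDs that a designated reviewer must handle."""
    return [d.case_id for d in decisions if route(d, auto_threshold) == "human_review"]
```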

How can organizations measure the effectiveness of their AI governance?

Organizations can measure the effectiveness of their AI governance through various metrics, including compliance audit results, incident response times, and stakeholder feedback. Key performance indicators (KPIs) such as the frequency of bias incidents, the speed of model deployment, and the number of successful audits can provide insights into governance effectiveness. Additionally, regular reviews and assessments of governance frameworks can help identify areas for improvement, ensuring that the governance processes remain robust and aligned with evolving regulations and ethical standards.

What are the common challenges faced by SMBs in AI governance?

Small and medium-sized businesses (SMBs) often face challenges in AI governance, including limited resources, lack of expertise, and difficulty in navigating complex regulatory landscapes. These constraints can hinder their ability to implement comprehensive governance frameworks. Additionally, SMBs may struggle with establishing clear decision rights and accountability structures, which are essential for effective governance. To overcome these challenges, SMBs can leverage lightweight governance templates, seek external expertise, and prioritize high-impact initiatives that align with their business goals.

How can organizations ensure compliance with evolving AI regulations?

To ensure compliance with evolving AI regulations, organizations should adopt a proactive approach that includes continuous monitoring of regulatory changes and regular updates to their governance frameworks. Establishing a dedicated compliance team or appointing a fractional Chief AI Officer can help organizations stay informed about new requirements. Additionally, implementing flexible governance structures that can adapt to regulatory shifts, along with regular training for staff on compliance practices, will enhance an organization’s ability to meet changing legal obligations effectively.

What is the significance of model cards in AI governance?

Model cards are essential tools in AI governance as they provide a standardized way to document the characteristics, intended use, and limitations of AI models. They enhance transparency by detailing the data used for training, performance metrics, and potential biases. By creating model cards, organizations can facilitate better understanding among stakeholders, including regulators and end-users, about how AI systems operate. This documentation supports accountability and helps ensure that models are used responsibly and ethically, aligning with governance objectives.
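A model card can be kept as structured data so it is versioned alongside the model and checked automatically before release. The fields below are a minimal, hypothetical subset; published model card templates typically include more sections, such as evaluation data and ethical considerations. The `gaps()` method simply reports fields left empty.

```python
from dataclasses import dataclass, field, asdict

@dataclass
class ModelCard:
    name: str
    version: str
    owner: str
    intended_use: str
    training_data: str
    metrics: dict = field(default_factory=dict)            # e.g. {"recall": 0.88}
    known_limitations: list = field(default_factory=list)

    def gaps(self):
        """Names of fields left empty; a release gate can block on these."""
        return [k for k, v in asdict(self).items() if not v]

    def to_markdown(self):
        """Render the card as a short, human-readable summary."""
        lines = [f"# Model card: {self.name} v{self.version}",
                 f"- Owner: {self.owner}",
                 f"- Intended use: {self.intended_use}",
                 f"- Training data: {self.training_data}"]
        lines += [f"- Metric {k}: {v}" for k, v in self.metrics.items()]
        lines += [f"- Limitation: {item}" for item in self.known_limitations]
        return "\n".join(lines)
```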

How can organizations foster a culture of ethical AI within their teams?

Organizations can foster a culture of ethical AI by promoting awareness and education around AI ethics among all team members. This can be achieved through regular training sessions, workshops, and discussions that emphasize the importance of fairness, transparency, and accountability in AI development. Encouraging open dialogue about ethical dilemmas and providing channels for reporting concerns can also empower employees to prioritize ethical considerations in their work. Leadership commitment to ethical AI practices is crucial in setting the tone and expectations for the entire organization.

Conclusion

Implementing effective AI governance in regulated industries not only ensures compliance but also fosters innovation and operational efficiency. By prioritizing ethical practices and transparency, organizations can mitigate risks and enhance stakeholder trust. Taking the first step towards responsible AI adoption is crucial; consider exploring our tailored governance solutions today. Together, we can navigate the complexities of AI while unlocking its full potential for your business.