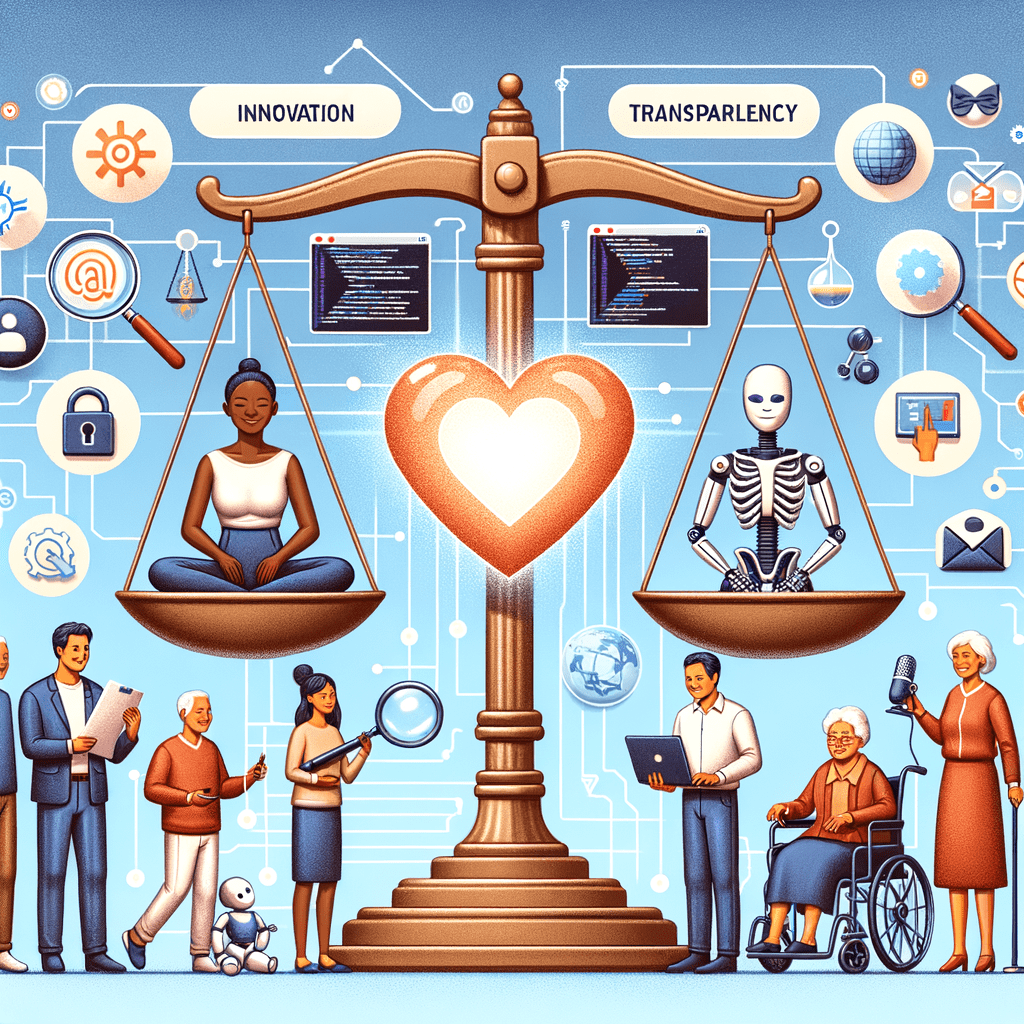

In today’s digital landscape, artificial intelligence (AI) is transforming industries and the way we work, making responsible AI implementation more critical than ever. AI’s growing power raises ethical questions about responsible use in business. This guide explores practical ways to harness AI’s positive impact, enhancing employee well-being, productivity, and work-life balance.

Why Responsible AI Implementation Matters

AI offers exciting opportunities. However, irresponsible implementation can lead to unintended consequences like biased systems and discrimination. This can harm employee morale, damage your reputation, and create legal risks.

A PwC report highlights accountability as crucial for public trust. Responsible AI implementation through clear guidelines minimizes risks, promotes employee trust, and ensures AI remains beneficial.

Key Principles of Responsible AI Implementation

Successful responsible AI implementation hinges on core guidelines embedding ethical considerations into AI systems.

- Fairness: AI systems should be designed and used fairly, avoiding bias against any employee demographics.

- Transparency and Explainability: Employees should understand how AI systems make decisions. AI should not be a mysterious “black box.”

- Privacy and Security: Responsible AI implementation involves building trustworthy AI and protecting user information throughout the AI lifecycle, as emphasized by the National Institute of Standards and Technology (NIST).

- Accountability: Clear processes should define responsibilities and actions for AI system failures.

- Human Oversight: Important decisions, especially those affecting jobs or career growth, should require human approval even when AI supports them.

- Societal Well-being: Consider AI’s broader impact on staff, aligning applications with company values and ethical standards.
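The human oversight principle above can be sketched as a simple routing rule. This is a minimal illustration, not a prescribed design: the `HIGH_IMPACT` categories, the `Recommendation` fields, and the `min_confidence` threshold are all hypothetical and would need to be defined by your own governance process.

```python
from dataclasses import dataclass

# Hypothetical decision types that should never be fully automated.
HIGH_IMPACT = {"hiring", "promotion", "termination", "compensation"}


@dataclass
class Recommendation:
    decision_type: str   # e.g. "promotion" or "meeting_scheduling"
    suggestion: str      # what the AI system proposes
    confidence: float    # model-reported score in [0, 1] (assumed to exist)


def route(rec: Recommendation, min_confidence: float = 0.9) -> str:
    """Decide whether a recommendation needs human sign-off."""
    # Anything touching jobs or career growth always goes to a person.
    if rec.decision_type in HIGH_IMPACT:
        return "human_review"
    # Low-confidence suggestions are also escalated.
    if rec.confidence < min_confidence:
        return "human_review"
    return "auto_apply"


promotion = Recommendation("promotion", "advance to senior role", 0.97)
scheduling = Recommendation("meeting_scheduling", "move to 3 pm", 0.95)
print(route(promotion))   # human_review
print(route(scheduling))  # auto_apply
```

Note that the high-impact check comes first, so a confident model still cannot bypass review for career-related decisions.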

A Practical Framework for Implementing Responsible AI

This section outlines practical steps for integrating ethical principles with business goals and societal benefit in AI solutions. Demonstrating a genuine commitment to responsible artificial intelligence is essential in building trustworthy AI systems.

Deutsche Telekom’s experience shows how integrating ethical considerations into the development cycle prepares for emerging regulations and cultivates employee trust in AI.

1. Start with a Clear Strategy: Defining Roles and Responsibilities in AI Governance

Begin with a clear AI strategy and define ethical use cases. Engage AI consultants or external providers if needed. Establish specific AI oversight roles and appoint a responsible AI lead for projects.

For risk mitigation, learn from how US financial services companies are implementing generative AI. A structured governance approach with specific roles helps development teams put ethical AI principles into practice.

Diverse teams that include data scientists developing AI applications reduce bias and build inclusive technologies.

2. Address Bias in AI Systems

Audit training data for bias before it reaches your AI systems, since no dataset is bias-free by default. Increase team diversity to reflect various perspectives and address societal impact.

Implement safeguards from IBM’s Pillars of Trust to mitigate bias. Regular checks with bias-aware algorithms ensure balanced and fair AI systems. Encourage diverse viewpoints to identify potential biases.
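As one illustration of a regular bias check, the sketch below compares how often each group receives a favorable outcome and computes the ratio of the lowest rate to the highest. The 0.8 cutoff is the widely used "four-fifths" heuristic, not something prescribed by this guide, and the group labels and sample data are hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the AI system produced a favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome; nothing to compare
    return min(rates.values()) / highest


# Hypothetical audit sample: group A selected 6 of 10 times, group B 3 of 10.
sample = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # four-fifths heuristic (an assumed threshold)
print(rates, ratio, needs_review)
```

A low ratio does not prove discrimination on its own, but it is a reasonable trigger for a closer human review of the data and the model.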

3. Empower Your Workforce With AI Literacy

Equip your workforce with training and resources on responsible AI practices. Workshops on theoretical principles and practical guidelines promote transparency. Proactively address AI output and management issues.

Encourage ongoing monitoring and testing across different machine learning models to identify potential bias. Implement feedback mechanisms to spot and address potential harms before launch, similar to S&P Global’s use of NIST’s Risk Management Framework. This approach can contribute to building trustworthy AI.

4. Embrace Transparency and Open Communication in AI-driven Workflows

Foster open communication about AI implementation and use. Explain AI-driven decisions to staff and encourage questions.

IBM’s explainability principles offer a blueprint for transparency. Accurate conclusions stem from high-quality machine learning models and properly vetted training data. Combining robust, reliable training data with ethical values strengthens AI models and supports trustworthy AI.

5. Continuous Monitoring and Adaptation: Managing Risks in Ongoing AI Development

Continuously monitor AI systems for responsible and fair operation. Implement early problem detection mechanisms.

For complex processes that combine several AI solutions, adopt adaptive methods such as rule-set evolution. Ongoing assessments identify vulnerabilities and help maintain accuracy, robustness, and adaptability.
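An early problem detection mechanism can be as simple as tracking recent accuracy over a rolling window. The sketch below is one possible approach under stated assumptions: the `AccuracyMonitor` class, the window size, and the minimum accuracy are hypothetical, and it presumes you can later compare each prediction with the real outcome.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent predictions."""

    def __init__(self, window=100, min_accuracy=0.9):
        # deque with maxlen silently drops the oldest result when full.
        self.results = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual):
        """Store whether a prediction matched the observed outcome."""
        self.results.append(predicted == actual)

    def accuracy(self):
        """Return recent accuracy, or None if nothing was recorded yet."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_attention(self):
        """True when recent accuracy falls below the configured minimum."""
        acc = self.accuracy()
        return acc is not None and acc < self.min_accuracy


monitor = AccuracyMonitor(window=4, min_accuracy=0.75)
for predicted, actual in [("yes", "yes"), ("no", "no"), ("yes", "no"), ("no", "yes")]:
    monitor.record(predicted, actual)

print(monitor.accuracy())         # 0.5
print(monitor.needs_attention())  # True
```

The same pattern can track fairness metrics per group instead of overall accuracy, so that a drift in one demographic is not hidden by a stable average.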

Responsible AI Implementation: A Case Study

IBM’s 2020 decision to exit the facial recognition market over bias concerns exemplifies responsible AI. Its action offers a model for organizations navigating AI implementation and underscores the need to adapt as technology evolves.

FAQs About Responsible AI Implementation

What are the 6 principles of responsible AI?

Six common principles for responsible AI are fairness, transparency and explainability, privacy and security, accountability, human oversight, and societal well-being. Together they provide a foundation for implementing responsible AI.

How to implement AI ethically?

Ethical AI implementation starts with clear guidelines prioritizing fairness, transparency, privacy, and human oversight. Ensure diverse data, develop bias detection methods, and provide user training.

What’s one way for companies to implement responsible AI?

Empower employees by educating them on appropriate AI applications and their outputs. This insight allows them to evaluate AI’s strengths and limitations.

What are the 6 principles of Cisco’s responsible AI?

Cisco’s six principles are transparency, fairness, accountability, privacy, security, and reliability. They offer guidance for responsible design, development, and deployment.

Responsible AI: The Way Forward

Responsible AI implementation is crucial for all businesses, especially small and mid-sized enterprises integrating AI systems. Organizations that prioritize employee well-being and business growth recognize responsible AI as vital for achieving their objectives. Following principles like fairness and transparency unlocks AI’s potential while mitigating ethical risks.

This proactive approach builds trust in AI decisions, fostering employee happiness and efficiency. How is your business approaching this new era of responsible AI? Share your thoughts in the comments.